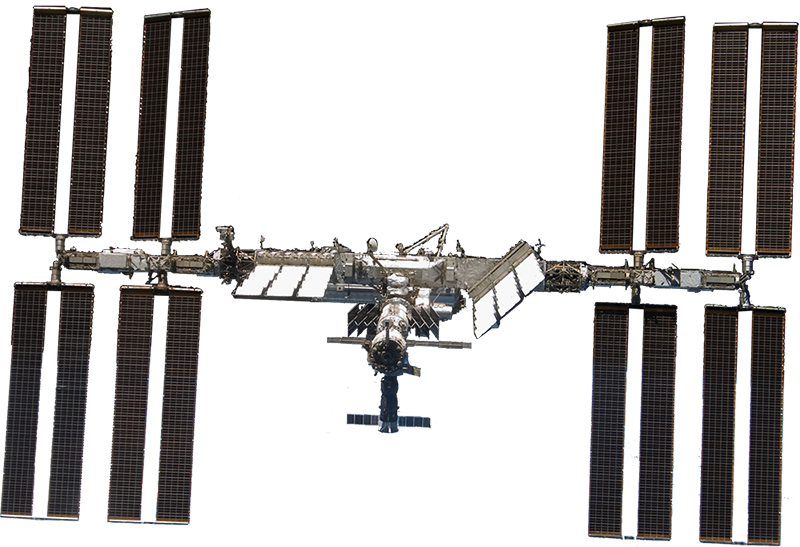

After years of assembly, module by module, the International Space Station (ISS) is finally complete.

Now, the focus turns to payload science – performing scientific experiments in zero gravity. Crew members' schedules are planned down to the minute around these experiments, and they must follow procedures to complete each task efficiently and effectively.

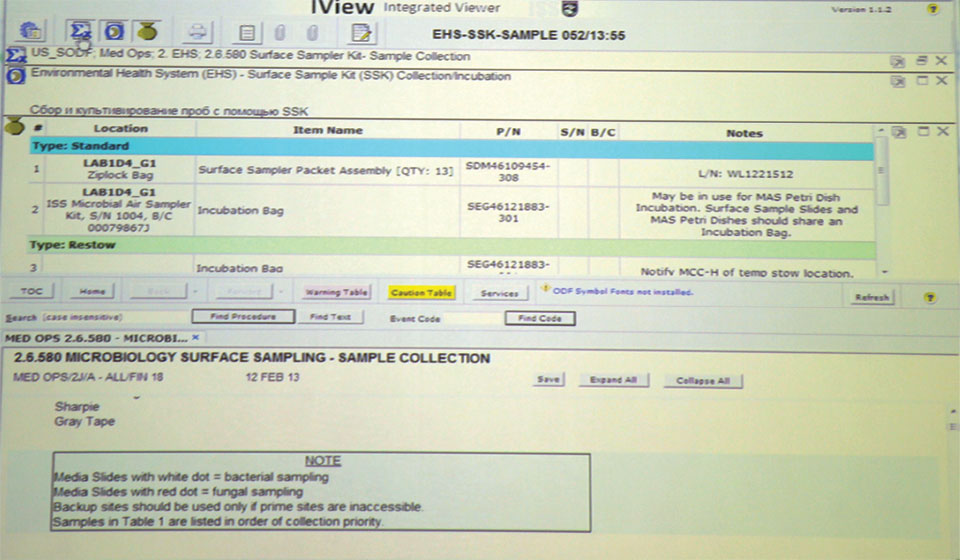

The International Procedure Viewer (IPV) prevents crew members from operating at maximum efficiency.

Procedures within the IPV are static and do not represent the ad hoc nature of the actual tasks. More visual assistance is also necessary, especially for complex procedures. Furthermore, the IPV is stationary, forcing crew members to travel back and forth throughout the ISS.

We are designing, prototyping, and building a procedure viewing device that:

- Streamlines information displays

- Adapts procedures based on context

- Allows crew member mobility

- Provides means for hands-free interaction

OVERVIEW

After completing a literature review of our problem space and a competitive analysis of procedure viewers, we dug into the meat of our research: domain-specific and analogous user research.

Using UX methods like contextual inquiry, directed storytelling, and card sorting, we interviewed 27 domain experts:

- 2 Astronauts

- 9 NASA employees

- 5 Pilots

- 4 Deep sea divers

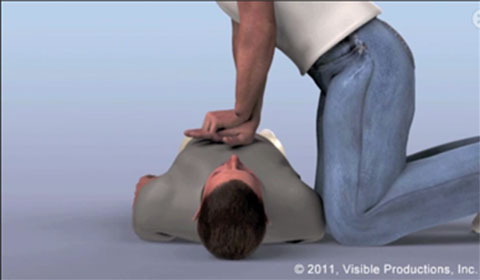

- 2 Paramedics

- 2 Lab technicians

- 2 Construction managers

- 1 Pit crew member

PRELIMINARY

Our literature review focused on the following:

- Psychology of space flight

- Structure of ISS/living in space

- Organization and presentation of procedures

- Cognitive processing of tasks

- Collaboration

The articles we read gave us a number of critical insights that informed our approach to user research, such as:

- Isolation and lack of on-the-ground normalcy can increase anxiety, insomnia, and depression in crew members.

- Procedure display methods proven effective across various domains include checklists, augmented reality displays, and in-context displays.

- High mental workload can decrease performance, especially when interruptions are forced during those periods of heightened workload.

DOMAIN-SPECIFIC

Our research began and ended with astronaut interviews. Both astronauts had served as commanders: one had worked on the Mir space station, and the other had been on board the ISS as recently as six months before the interview.

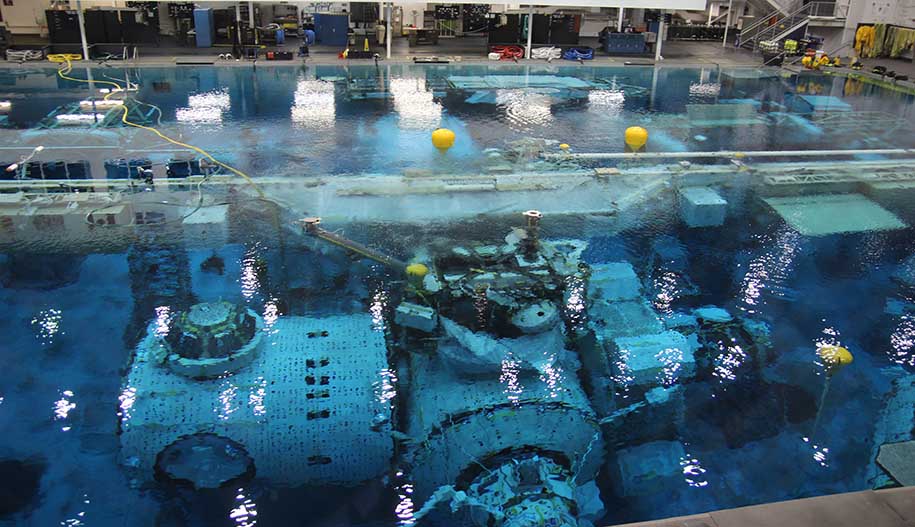

At the Johnson Space Center in Houston, we interviewed experts within Operations Planning, Payload Science, Human Factors, Next Generation Scheduling, Wearable Computing, and Flight Deck of the Future. We also toured the Space Vehicle Mockup Facility and the Neutral Buoyancy Laboratory.

ANALOGOUS DOMAINS

Because we expected astronauts to be difficult to access, we also investigated domains with parallels in workflow and environment. We selected these domains using the following five criteria:

- Is documentation required while completing a task?

- Is there a variety of tasks?

- Does the work involve ad hoc tasks?

- Are tasks executed in an isolated environment?

- Does the work require the use of tools?

PEER

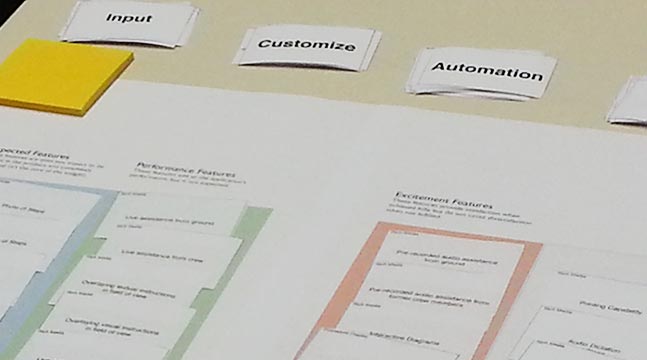

Based on our user needs, we developed PEER, a Procedure Execution with Enhanced Reality system for head-mounted displays.

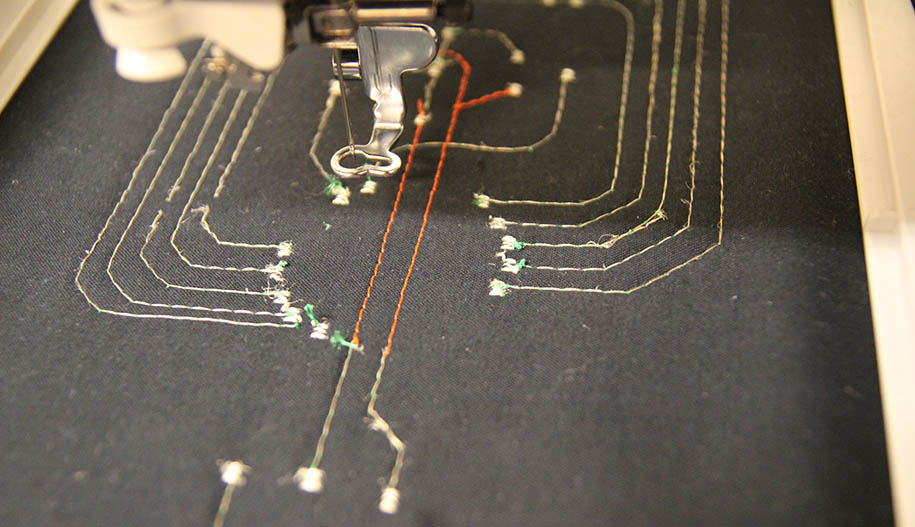

PEER is an Android application designed for the Epson Moverio head-mounted display, using voice control to provide users with a completely hands-free experience.

Core features include:

- Visualizing information and directions using images, videos, and augmented reality

- Navigating through steps in a highly granular fashion

- Displaying information when and where it is needed

- Accelerating data analysis using object recognition

- Modularizing procedure authoring with an extensible XML-based paradigm
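To make the XML-based authoring and granular navigation ideas more concrete, here is a minimal sketch in Python. It is not PEER's actual schema or code: the element names (`procedure`, `step`, `substep`), the attributes (`id`, `text`, `media`), and the `Instruction`, `load_instructions`, and `ProcedureCursor` names are all hypothetical, chosen only for illustration. Plain command strings stand in for whatever a voice recognizer would produce.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical procedure document. Element and attribute names are
# illustrative assumptions, not PEER's real format.
PROCEDURE_XML = """
<procedure id="demo-01" title="Replace sample cartridge">
  <step id="1" text="Gather tools">
    <substep id="1.1" text="Retrieve cartridge kit" media="image"/>
    <substep id="1.2" text="Retrieve torque driver"/>
  </step>
  <step id="2" text="Remove old cartridge">
    <substep id="2.1" text="Loosen four fasteners" media="video"/>
  </step>
</procedure>
"""


@dataclass
class Instruction:
    id: str
    text: str
    media: Optional[str] = None  # e.g. "image" or "video", if any is attached


def load_instructions(xml_text: str) -> List[Instruction]:
    """Flatten steps and substeps into one ordered list for fine-grained navigation."""
    root = ET.fromstring(xml_text)
    flat: List[Instruction] = []
    for step in root.findall("step"):
        flat.append(Instruction(step.get("id"), step.get("text"), step.get("media")))
        for sub in step.findall("substep"):
            flat.append(Instruction(sub.get("id"), sub.get("text"), sub.get("media")))
    return flat


class ProcedureCursor:
    """Tracks the current instruction and moves in response to command words."""

    def __init__(self, instructions: List[Instruction]):
        self._items = instructions
        self._index = 0

    @property
    def current(self) -> Instruction:
        return self._items[self._index]

    def handle(self, command: str) -> Instruction:
        # Unknown commands leave the position unchanged; moves are clamped
        # to the first and last instruction.
        if command == "next":
            self._index = min(self._index + 1, len(self._items) - 1)
        elif command == "back":
            self._index = max(self._index - 1, 0)
        return self.current


cursor = ProcedureCursor(load_instructions(PROCEDURE_XML))
for word in ["next", "next", "back"]:
    item = cursor.handle(word)
    print(item.id, item.text, item.media)
```

Flattening steps and substeps into a single ordered list is one simple way to let a wearer move one small instruction at a time; a real authoring format would likely need more structure, such as conditions, warnings, and data-entry fields.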

VISIONING

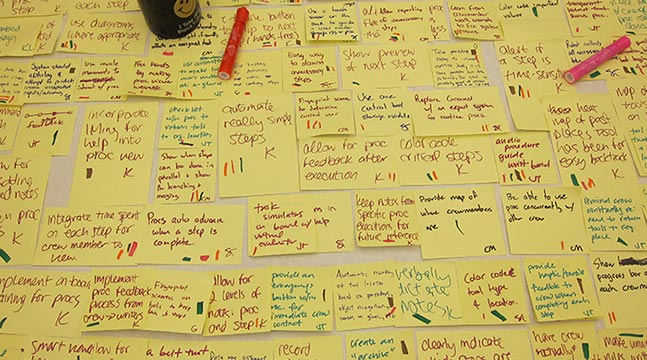

After synthesizing our research, we used our findings to jumpstart our ideation process.

We let our ideas go broad. Unwilling to let technological constraints limit our scope, we generated over four hundred initial design ideas. These were later consolidated into a few distinct visions that we hoped to pursue.

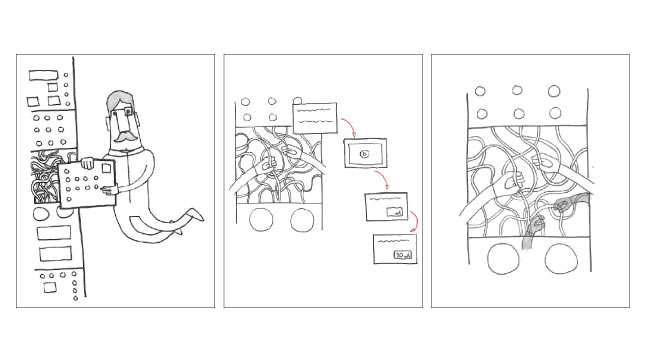

After presenting our ideas at the NASA Ames Research Center, we narrowed down our design direction through a series of visioning activities with our clients. After further research at the Augmented World Expo, we ultimately decided to pursue procedure execution on the head-mounted display.

PROTOTYPING

Designing for a head-mounted display was a challenge, but our rapid iteration cycle quickly fine-tuned our interactions.

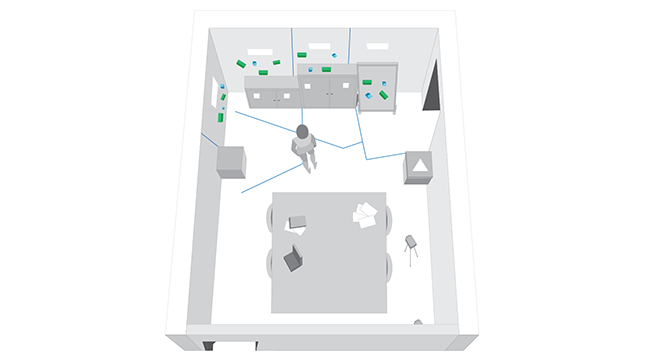

We began our prototyping process by creating a simulated ISS environment, as well as an analog testing procedure. With these, we conducted usability tests with 24 participants across 6 iterations of our interface.

In order to adequately test our interface, we had to simulate the visual experience of a see-through, wearable interface that follows your gaze and listens to your voice. This forced us to devise new prototyping methods, such as using transparencies or remotely controlling our head-mounted display through a secondary device.

FUTURE VISION

Our prototype was limited by the hardware available and our timeframe. However, given the necessary resources, we envision PEER addressing the following:

TEAM

Stephen holds a Bachelor's in psychology from the University of South Florida. He enjoys spending time with his wife, playing computer games, and learning new acronyms.

Kristina has a Bachelor's in linguistics from New York University. As a Pittsburgh native, she's excited to finally live in a place where she can see the sun more than twice a year.

Jenn has a Bachelor's in history, with minors in chemistry and sociology, from the University of California, San Diego. After the program, she wants to trek through the Himalayas.

Gordon holds a Bachelor's in both computer science and business administration from the University of Washington. In his free time, he enjoys playing basketball and computer games.

Chris earned a degree in computer science from Loyola Marymount University. He enjoys music and beach volleyball, and is a die-hard fan of the Arizona Cardinals.