Big Data, Big Work

Having collected more than 80,000 applications over the last decade, ApplyGrad is a cornerstone of admissions at Carnegie Mellon's School of Computer Science. In recent years, the system has handled a record-breaking 10,000 applications across 47 master's and doctoral programs. Growth is exciting, but the number of reviewers and the time available for review are limited, so the workload is becoming unmanageable. Simply put, the current infrastructure is not sustainable. This is where we, Team Super Cool Scholars, come in to envision the future of ApplyGrad. Over six months, we investigated how to make the monolithic system more efficient, effective, and fair in both the short and long term.

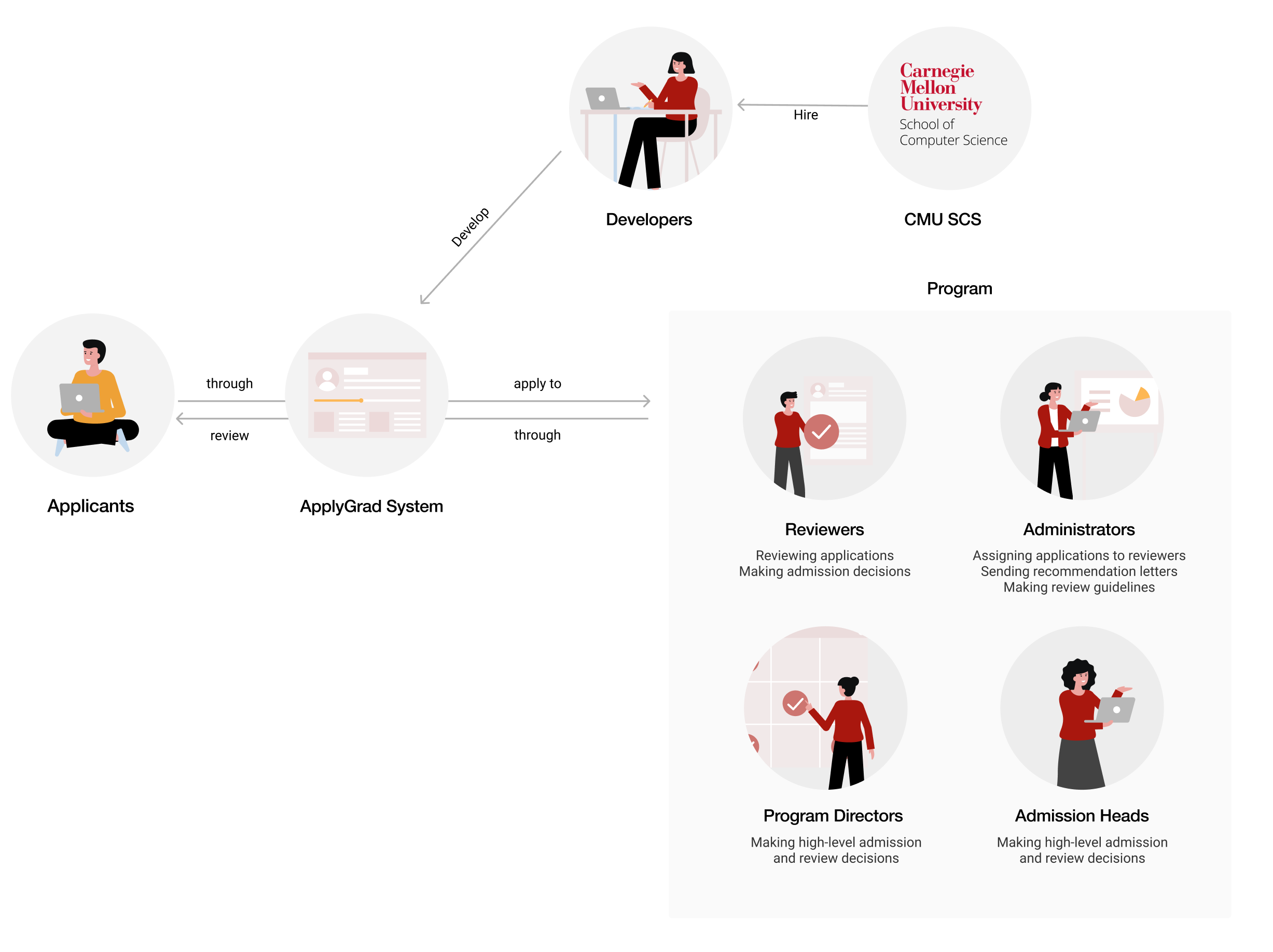

Stakeholder Analysis

Reducing Reviewer’s Cognitive Burden

During our research, we discovered that many aspects of ApplyGrad hinder reviewers' natural workflow, their ability to synthesize material, and their judgments about fairness. Reducing this cognitive burden matters because reviewers' decisions shape the trajectory of many people's lives. Reviewers currently spend long hours reviewing outside their regular working hours, often skipping self-care routines because they don't want to miss a suitable candidate. They know their reviews affect applicants' education and dreams. We must imagine a better system so that reviewers can get the rest they need to make the right decisions about thousands of applicants.

Researching & Designing in Three Horizons

We planned improvements across three horizons: UX, automation, and machine learning (ML). To be sustainable, the future of ApplyGrad must account for both short-term and long-term needs. The UX horizon contains most of our short-term goals: the critical, minimally viable improvements that can be made to the reviewer's immediate experience. Automation is a mid-term horizon; its improvements are just as crucial, but they may take longer to implement. Finally, we drafted long-term, aspirational goals that rely on ML.