Problem Statement:

“How might we bridge the gap between the controlled world of experiments and the unpredictable real world?”

“Genchi Genbutsu”

“Go to the place”

HRI abides by the Japanese principle “Genchi Genbutsu”. It translates to “go to the place” and means that fully understanding a problem requires examining it where it actually occurs. In HRI’s case, that means field testing.

現地現物

Four Challenges Stopping Field Testing

In practice, however, living up to this principle is not easy. Several major obstacles hinder HRI researchers’ ability to conduct field testing for human-AI teaming.

Challenge One

Research can be disconnected from product development

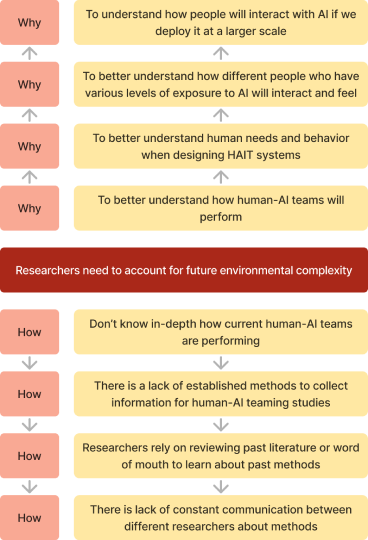

Using abstraction laddering to dive into the “whys”

Accounting for environmental variables that go unanticipated in controlled testing gives researchers a broader understanding of how different people interact with AI.

Researchers would be motivated to use field testing more often, gathering real-world insights faster and making field testing a regular research practice.

Having more real-world insights also makes it easier for researchers to translate research into actionable product directions, bridging the gap between research and business.

Current barriers to field testing:

Lack of existing metrics and measurements for studies

Resource-intensive nature of field testing

Uncertainty about how field testing would benefit researchers’ work

Challenge Two

Organizational silos can lead to redundant efforts

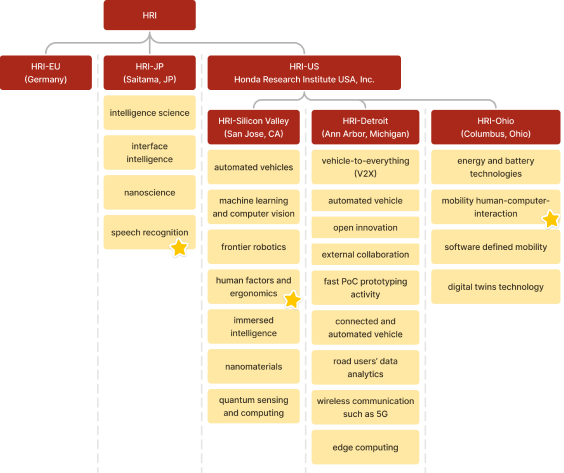

Going into the kickoff, we knew from the prompt that we would be working with internal stakeholders. We decided the best way to understand our key users was to map out HRI’s organizational structure, and we validated its functions and organizational system during the kickoff meeting. The diagram on the right shows our final iteration.

Reduced information sharing

From the organizational breakdown of HRI, we noticed that silos reduced information sharing among researchers at Honda Research Institute. For example, researchers at HRI Japan and HRI North America may be working on problems in similar domains without realizing they are tackling the same problem. This leads to inefficient resource allocation, increased research costs, and missed opportunities for innovation.

Challenge Three

Field testing is difficult and resource-intensive

In-the-field complexities

“Researchers prefer to start their research with a simple setup to remove distractions” -HRI Researcher

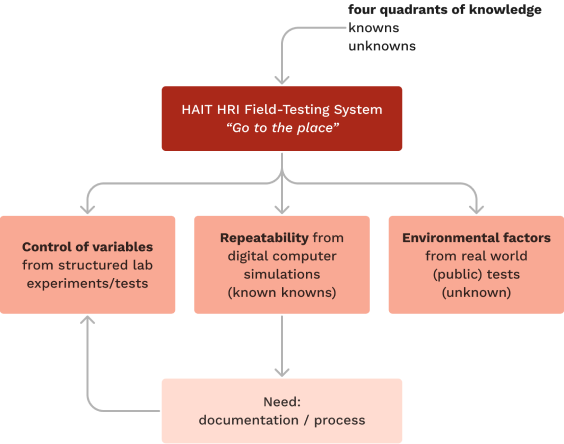

Designing a field test study involves considering variables like the examined value (e.g., trust), study contexts, and data collection methods.

Choosing between field testing and simulation-based testing depends on factors such as experiment repeatability and technology validation.

There is no consensus on when to use field testing; researchers hold differing views.

Despite some skepticism, field testing offers advantages, including access to diverse real-life scenarios and unexpected findings that enrich the dataset.

Both field testing and human-AI teaming studies employ varied metrics and data collection approaches, often requiring reference to prior research or custom methods.

Challenge Four

There are no existing metrics for measuring HAIT

“What variables should be assessed when an AI system encounters a group of individuals?”

In Sprint 3, we conducted a series of expert interviews with 5 CMU faculty members, 1 CMU PhD student, and 4 HRI researchers. Our objective was to delve into the research process of human-AI teaming (HAIT) and uncover the common challenges researchers encounter while conducting field testing. Through these interviews, we aimed to better understand how our solution could effectively address the testing challenges within HAIT.

The knowledge we gained from these interviews was crucial in informing our problem space. We not only understood the researchers’ underlying rationale but also shifted how we framed the problem itself.

The quote above came from one of our participants. It underscores a common obstacle in contemporary HRI research: the lack of clearly defined metrics for evaluating HAIT performance.

Pilot Experiments

Building empathy with researchers

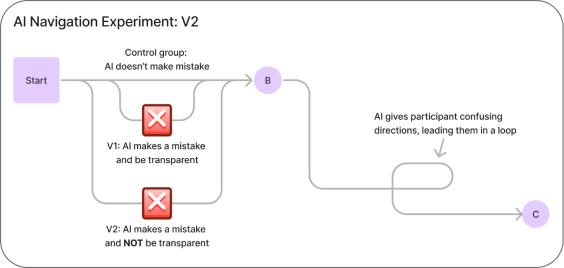

To better understand how researchers currently plan and conduct human-AI teaming research, we ran two of our own pilot experiments in human-AI teaming.

Drawing from the secondary research we had done on HAIT, we began to consider different variables and scenarios we could use for our studies.

To consider different use cases of AI, we split the studies into consumer-focused and productivity-focused experiments.

What we learned

Gained a sense of how the planning process may unfold for researchers.

Spent considerable time weighing research variables, much like the HRI stakeholders we spoke to.

Struggled to come up with ways to collect or measure data; we relied heavily on a manual review of existing research, which helped us empathize with researchers.

Pivot Point

From these experiments, we came to better empathize with researchers and their challenges, and realized it would be more effective to focus our project not on the research itself, but on the flows and processes within research.

So, how might we facilitate field testing for HRI researchers studying human-AI teaming?

Smart Guide: Streamlining and connecting research

To facilitate field testing for HRI researchers studying human-AI teaming, we developed a concept called the Smart Guide. Our team envisions the Smart Guide as a digital whiteboard that researchers can use to:

Reduce researchers’ workload so they can devote more time to research

Researchers log their weekly progress in the Smart Guide research database, and the Smart Guide helps produce research deliverables for other HRI stakeholders to use.

Establish standardized human-AI teaming measurements

By sharing findings, methods, and other figures in a weekly summary report to managers and colleagues, the Smart Guide would help standardize metrics by allowing future research to build off of past findings.

Connect researchers in different offices

While browsing literature in the database, researchers can quickly reach out to other researchers to communicate and collaborate on relevant or future work.

Bring research and product closer

By regularly delivering important findings to the product team in digestible summaries, the Smart Guide could help bridge the gap between research and product at HRI.

How did we get here?

Concept Testing

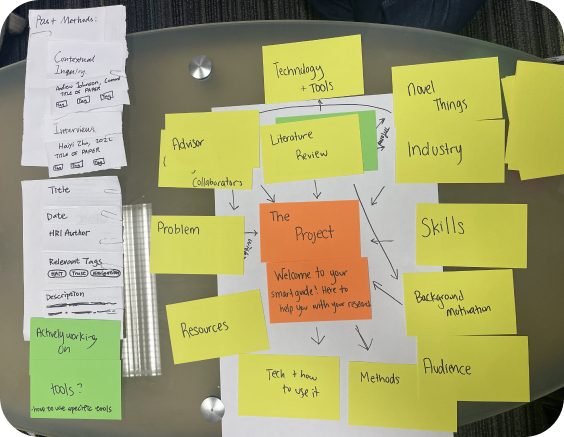

To validate whether the Smart Guide concept would benefit researchers, we conducted a series of concept tests using our paper prototype with 7 researchers at CMU.

Procedure

During the concept testing sessions, we asked researchers to recount their most recently completed research study using our model. Researchers engaged actively, customizing the card layout, adding or removing cards, and discussing their methodology.

After this process, we transitioned into a co-creation activity. We inquired about their biggest challenges during the study and asked them to connect those challenges to specific cards. Following this, we demonstrated example outputs our Smart Guide could produce, such as literature reviews.

Paper Prototype

Our paper prototype took the form of a set of notecards covering a variety of modules we believed to be relevant to a research project. We drew arrows to signify connections between modules.

Insights

Researchers have diverse needs, but some aspects remain consistent across all of them:

Insight 1

All research starts at different places

Research projects are often born in different stages: some are continuing on past research, while others are exploring an entirely new domain.

Insight 2

Literature review happens in every stage

Literature reviews sometimes continued throughout the project. Many interviewees described difficulty parsing dozens of papers to find specific articles or findings.

Insight 3

Uncertainty needs to be accounted for

Because HAIT is an emerging field, some methods, metrics, or findings were so new that other researchers had not yet validated them.

Insight 4

Outputs should include visuals such as diagrams, graphs, or figures

A picture, diagram, or table is worth a thousand words; interviewees often found them extremely helpful.

From insights to implications

These interviews allowed us to validate our current model of the research process, identify new modules, and confirm the need for such an agent.

Ideally, this solution would:

1. Alleviate some of the cognitive load caused by redundant administrative work, such as setting up meetings with collaborators or other researchers

2. Remember current research being done, retrieve it and communicate it with the researcher when necessary

3. Nudge researchers to conduct field-testing when it would be appropriate

4. Document and compile their research to help publish papers and provide glanceable summaries for HRI management

5. Account for diverse needs by valuing flexibility highly in our next iteration