CMU Researchers Develop Tool To Help Determine When To Reuse Content

Don't reinvent the wheel, the saying goes. But before using someone else's design, at least make sure it will be round.

The answers to most people's questions or the solutions to most challenges can be found within the seemingly endless content on the internet. The problem, however, lies in determining what content is good to reuse and what content should be tossed aside as refuse.

Often, validating a piece of found content or information takes longer than starting from scratch.

Researchers from Carnegie Mellon University's Human-Computer Interaction Institute tackled this problem in a paper titled "To Reuse or Not To Reuse?: A Framework and System for Evaluating Summarized Knowledge." The work received a Best Paper award at the 24th ACM Conference on Computer-Supported Cooperative Work and Social Computing last month.

"How do I trust the people who did the previous work? Are their sources credible? How much work did they do to complete the task? Is it up to date? This is information that would be helpful in making a decision about whether to use someone's work," said Michael Xieyang Liu, an HCII Ph.D. student and lead author of the paper.

In the paper, the researchers focused on computer programming, an area where people often borrow lines of code or information from others. HCII Professor Brad Myers, who co-advises Liu, said programming is now often done with a search engine window open next to the programming window. Most of the time, programmers rely on official documentation or well-known question-and-answer sites to help them with their code.

"But it is often the case that you go to a random site and determining whether to use what you find there is definitely a key problem for programmers," Myers said.

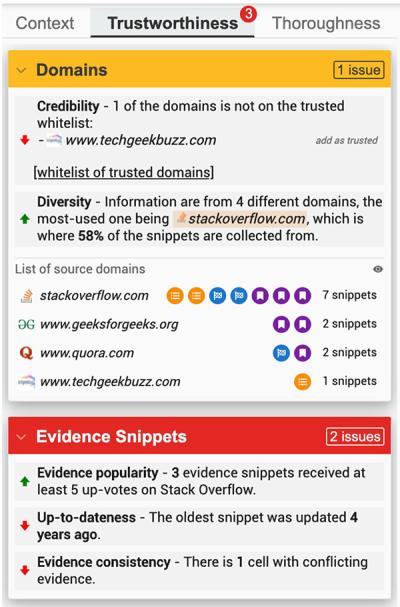

The researchers started by determining what information was important to developers as they evaluated whether to use content from someone else. They consolidated these into three key factors: context, trustworthiness and thoroughness. The researchers then looked for ways to gather this information automatically using metadata associated with the website such as programming language used, how many up-votes an article received and when the site was last updated. In addition, they leveraged information like the search queries that the author used, how often a piece of content showed up as a search result, and how long the author spent on the content to help developers assess these factors.
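To make the three factors concrete, the signals described above could be grouped roughly as follows. This is only an illustrative sketch, not Strata's actual data model: every class and field name here is hypothetical, and the assignment of each signal to a factor is an assumption made for the example.

```python
from dataclasses import dataclass, field, asdict
from datetime import date
from typing import Optional


@dataclass
class ContextSignals:
    # Hypothetical: what the original author was working with and looking for.
    programming_language: Optional[str] = None
    search_queries: list[str] = field(default_factory=list)


@dataclass
class TrustworthinessSignals:
    # Hypothetical: popularity and freshness cues taken from page metadata.
    up_votes: Optional[int] = None
    last_updated: Optional[date] = None
    search_result_appearances: int = 0


@dataclass
class ThoroughnessSignals:
    # Hypothetical: how much effort the author put into the content.
    seconds_spent: float = 0.0


@dataclass
class ReuseSignals:
    """All signals for one piece of found content, grouped by factor."""
    context: ContextSignals
    trustworthiness: TrustworthinessSignals
    thoroughness: ThoroughnessSignals


# Example record for a single snippet (all values are made up).
signals = ReuseSignals(
    context=ContextSignals("Python", ["parse json python"]),
    trustworthiness=TrustworthinessSignals(
        up_votes=12, last_updated=date(2021, 6, 1), search_result_appearances=3
    ),
    thoroughness=ThoroughnessSignals(seconds_spent=420.0),
)

# asdict() yields a nested dictionary with one top-level key per factor,
# which is a convenient shape for rendering one sidebar section per factor.
grouped = asdict(signals)
```

Keeping one small record per factor mirrors the way the article describes the framework, and leaves room for signals that are missing on a given page (hence the `Optional` fields).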

To display their data, they built a tool called Strata that presents metadata associated with these three factors in a sidebar, and alerts developers to any potential issues with reusing someone else's content. It is built on top of Unakite, a Chrome extension the team previously created to help developers collect, organize and understand complex information as they see it online.
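The kind of alerting described here could look something like the sketch below. It is a guess at the general idea rather than how Strata works: the function name, its parameters, and both thresholds are hypothetical and chosen only for illustration.

```python
from datetime import date
from typing import Optional


def find_potential_issues(
    snippet_language: Optional[str],
    reader_language: str,
    last_updated: Optional[date],
    seconds_spent: float,
    today: date,
    max_age_days: int = 730,          # hypothetical threshold: about two years
    min_seconds_spent: float = 60.0,  # hypothetical threshold: one minute
) -> list[str]:
    """Return human-readable warnings, one per factor that looks doubtful."""
    issues: list[str] = []

    # Context: was the content written for the language the reader uses?
    if (
        snippet_language is not None
        and snippet_language.lower() != reader_language.lower()
    ):
        issues.append(
            f"Context: written for {snippet_language}, not {reader_language}"
        )

    # Trustworthiness: is the source reasonably up to date?
    if last_updated is None:
        issues.append("Trustworthiness: no last-updated date available")
    elif (today - last_updated).days > max_age_days:
        issues.append(f"Trustworthiness: last updated on {last_updated}")

    # Thoroughness: did the author spend much time on the content?
    if seconds_spent < min_seconds_spent:
        issues.append(
            f"Thoroughness: author spent only {seconds_spent:.0f} seconds"
        )

    return issues


# A stale snippet in a different language, skimmed quickly by its author.
warnings = find_potential_issues(
    "Python", "JavaScript", date(2018, 1, 1), 30.0, today=date(2021, 11, 1)
)
```

Passing `today` in explicitly, instead of reading the system clock inside the function, keeps the check easy to reproduce and test.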

Aniket "Niki" Kittur, a professor in the HCII and a co-advisor to Liu, said Strata is tweaked and tuned for developers, but the tool could easily be adapted to handle more general information. A person evaluating a trip report from Spain could consider how similar they are to the author (context); how popular the trip report is and how often it's cited or referenced (trustworthiness); and how much time the author spent on the report (thoroughness). The author could work inside Strata to provide some of the information, and other bits could be gleaned from the internet or metadata about the work.

"The importance of this problem is becoming increasingly apparent in almost any domain where people are trying to use something people have previously done," Kittur said. "On the one hand, it's a lot easier to reuse someone's work. But on the other hand, it could take you a long time to answer important questions about the work. That could end up taking you longer than simply doing that work yourself."

The researchers believe this is the first paper both to synthesize the information people consider important when evaluating whether to reuse content and to provide a tool for making those decisions. They hope the research will provide a framework for further work in this area and eventually lead to dashboards or other tools that people could use to collect relevant information and make reuse more efficient and effective.

"What if we could reuse all the work everyone is putting out there instead of redoing everything?" Kittur said.

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu
