PrISM: Procedural Interaction from Sensing Module

AI Assistants for Real-World Tasks

2024

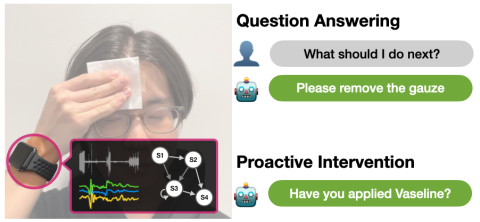

Our PrISM assistant uses multimodal sensors (e.g., a smartwatch) to understand the user's state during procedural tasks and offers situated support, such as context-aware question answering and timely interventions to prevent errors.

Many everyday tasks, from cooking to medical self-care, involve a series of atomic steps. Executing these steps correctly can be challenging, particularly for novices or people who are distracted. To address this, we have developed context-aware AI assistants that use multimodal sensors, including audio and motion sensors on a smartwatch and privacy-preserving ambient sensors such as Doppler radar. These sensors drive human activity recognition, which gives the assistant contextual data about the user's actions and enables it to offer precise question answering and timely interventions to prevent errors. We evaluate the PrISM assistant across various scenarios, including supporting post-operative skin cancer patients in their self-care procedures to improve their accuracy and independence.
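To make the idea of tracking a user through a procedure more concrete, here is a minimal sketch of one way noisy per-frame activity predictions could be combined with knowledge of the step order. It uses standard Viterbi decoding as a stand-in and is not necessarily how any PrISM system is implemented. The function name `track_steps`, the three-step procedure, and all probabilities are hypothetical values chosen for illustration.

```python
import math


def track_steps(frame_probs, transitions, start):
    """Return the most likely step index per frame (Viterbi decoding).

    frame_probs[t][s]: assumed classifier probability of step s at frame t.
    transitions[p][s]: assumed probability of moving from step p to step s.
    start[s]:          assumed probability of beginning in step s.
    """
    def safe_log(p):
        return math.log(p) if p > 0 else float("-inf")

    n_steps = len(start)
    scores = [safe_log(start[s]) + safe_log(frame_probs[0][s])
              for s in range(n_steps)]
    back = []  # back[t-1][s] = best predecessor of step s at frame t

    for probs in frame_probs[1:]:
        new_scores, pointers = [], []
        for s in range(n_steps):
            best_prev = max(
                range(n_steps),
                key=lambda p: scores[p] + safe_log(transitions[p][s]),
            )
            new_scores.append(
                scores[best_prev]
                + safe_log(transitions[best_prev][s])
                + safe_log(probs[s])
            )
            pointers.append(best_prev)
        scores = new_scores
        back.append(pointers)

    # Trace the best path backwards from the most likely final step.
    state = max(range(n_steps), key=lambda s: scores[s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path


# Hypothetical 3-step procedure in which steps can only repeat or advance.
TRANSITIONS = [
    [0.8, 0.2, 0.0],
    [0.0, 0.8, 0.2],
    [0.0, 0.0, 1.0],
]
START = [1.0, 0.0, 0.0]

# Made-up classifier outputs; frame 2 wrongly favors step 2.
FRAME_PROBS = [
    [0.80, 0.10, 0.10],
    [0.70, 0.20, 0.10],
    [0.30, 0.20, 0.50],
    [0.20, 0.70, 0.10],
    [0.10, 0.80, 0.10],
    [0.05, 0.10, 0.85],
]

path = track_steps(FRAME_PROBS, TRANSITIONS, START)
print(path)  # [0, 0, 0, 1, 1, 2]
```

Taking the most likely step in each frame independently would give `[0, 0, 2, 1, 1, 2]`, which jumps ahead to the last step and then back. The transition constraints smooth that out, and an assistant could use a tracked sequence like this to decide when a question refers to the current step or when a reminder is due.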

Learn more about the family of PrISM work:

- PrISM-Tracker: A Framework for Multimodal Procedure Tracking Using Wearable Sensors and State Transition Information with User-Driven Handling of Errors and Uncertainty

- PrISM-Observer: Intervention Agent to Help Users Perform Everyday Procedures Sensed using a Smartwatch

- PrISM-Q&A (coming soon)

PrISM-Observer video

Researchers

Mayank Goel, Riku Arakawa

Research Areas