RealityReplay

Detecting and Replaying Temporal Changes In Situ using Mixed Reality

2023

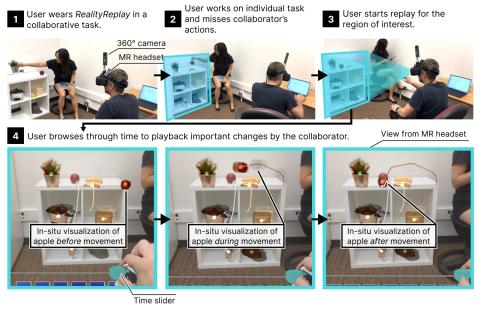

Scenario of a user working with the RealityReplay system.

Humans easily miss events in their surroundings due to limited short-term memory and field of view. This happens, for example, while watching an instructor's machine repair demonstration or conversing during a sports game. We present RealityReplay, a novel Mixed Reality (MR) approach that tracks such significant events and visualizes them in situ, without modifying the physical space. It requires only a head-mounted MR display and a 360-degree camera. We contribute a method for egocentric tracking of important motion events in users' surroundings, based on a combination of semantic segmentation and saliency prediction, and for generating in-situ MR visual summaries of temporal changes. These summary visualizations are overlaid onto the physical world to reveal which objects moved, in what order, and along which trajectories, enabling users to observe previously hidden events. The visualizations are informed by a formative study comparing how different visualization styles affect users' perception of temporal changes. Our evaluation shows that RealityReplay significantly enhances sensemaking of temporal motion events compared to memory-based recall. We demonstrate application scenarios in guidance, education, and observation, and discuss implications for extending human spatiotemporal capabilities through technological augmentation.
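
The abstract only outlines the tracking idea: keep motion events on objects that a saliency model considers important, then summarize which objects moved, in what order, and along which path. The sketch below illustrates that summarization step under stated assumptions. It is not RealityReplay's actual pipeline. It assumes an upstream semantic segmentation model has already produced a per-frame centroid track for each labeled object, and that a saliency model has been reduced to one score per object. The names `MotionEvent` and `detect_motion_events` and the thresholds `move_thresh` and `saliency_thresh` are hypothetical.

```python
from dataclasses import dataclass, field
from math import dist
from typing import Dict, List, Tuple

Point = Tuple[float, float]


@dataclass
class MotionEvent:
    label: str        # semantic class of the object (assumed output of a segmentation model)
    start_frame: int  # first frame in which motion was detected; used to order events
    trajectory: List[Point] = field(default_factory=list)  # centroids visited while moving


def detect_motion_events(
    tracks: Dict[str, List[Point]],
    saliency: Dict[str, float],
    move_thresh: float = 0.05,     # hypothetical minimum per-frame displacement that counts as motion
    saliency_thresh: float = 0.5,  # hypothetical minimum saliency for an object to be "important"
) -> List[MotionEvent]:
    """Return salient motion events sorted by the frame in which motion began.

    tracks:   {label: [centroid at frame 0, centroid at frame 1, ...]}
    saliency: {label: score in [0, 1]}, e.g. mean predicted saliency over the object's mask
    """
    events = []
    for label, positions in tracks.items():
        if saliency.get(label, 0.0) < saliency_thresh:
            continue  # skip objects the saliency model deems unimportant
        event = None
        for t in range(1, len(positions)):
            if dist(positions[t - 1], positions[t]) > move_thresh:
                if event is None:
                    # Motion starts here: record the resting position before the move.
                    event = MotionEvent(label, t, [positions[t - 1]])
                event.trajectory.append(positions[t])
        if event is not None:
            events.append(event)
    # "In what order" objects moved corresponds to sorting by start frame.
    return sorted(events, key=lambda e: e.start_frame)
```

For example, with a `wrench` that starts moving at frame 2, a `cup` that moves at frame 1, and a low-saliency `wall` that never moves, the function returns the cup event first and then the wrench event with its three-point trajectory. An MR front end could then render each trajectory as an overlay, labeled by its order. Sorting by first motion is only one plausible ordering rule.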


RealityReplay with the Augmented Perception Lab

Researchers

David Lindlbauer, Hyunsung Cho

Research Areas