SemanticAdapt

Optimization-based Adaptation of Mixed Reality Layouts Leveraging Virtual-Physical Semantic Connections

2021

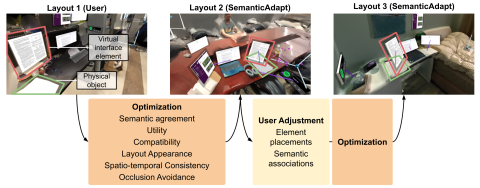

We present an optimization-based approach that automatically adapts Mixed Reality (MR) interfaces to different physical environments. Current MR layouts, including the position and scale of virtual interface elements, need to be manually adapted by users whenever they move between environments and whenever they switch tasks. This process is tedious and time-consuming, and arguably needs to be automated by MR systems for them to be beneficial for end users. We contribute an approach that formulates this challenge as a combinatorial optimization problem and automatically decides the placement of virtual interface elements in new environments. In contrast to prior work, we exploit the semantic association between the virtual interface elements and physical objects in an environment. Our optimization furthermore considers the utility of elements for users' current task, layout factors, and spatio-temporal consistency with previous environments. All of these factors are combined in a single linear program, which is used to adapt the layout of MR interfaces in real time. We demonstrate a set of application scenarios, showcasing the versatility and applicability of our approach. Finally, we show that, compared to a naive adaptive baseline that does not take semantic association into account, our approach decreased the number of manual interface adaptations by 37%.
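To make the idea of placement as combinatorial optimization more concrete, the following is a minimal sketch, not the method described in the paper. It assigns three virtual elements to three candidate locations by maximizing a weighted sum of semantic association and consistency with a previous layout, scaled by task utility. All element names, location names, scores, and weights are hypothetical, layout factors are omitted, and an exhaustive search over assignments stands in for the linear program mentioned above.

```python
from itertools import permutations

# Hypothetical toy data: every name, score, and weight below is an
# illustrative assumption and does not come from the paper.
ELEMENTS = ["recipe", "email", "music"]
CONTAINERS = ["kitchen_counter", "desk", "speaker_shelf"]

# SEMANTIC[e][c]: assumed strength of association between virtual element e
# and the physical object at candidate location c (0 = none, 1 = strong).
SEMANTIC = {
    "recipe": {"kitchen_counter": 0.9, "desk": 0.2, "speaker_shelf": 0.1},
    "email": {"kitchen_counter": 0.1, "desk": 0.8, "speaker_shelf": 0.1},
    "music": {"kitchen_counter": 0.2, "desk": 0.3, "speaker_shelf": 0.9},
}

# UTILITY[e]: assumed importance of element e for the user's current task.
UTILITY = {"recipe": 1.0, "email": 0.3, "music": 0.6}

# PREVIOUS[e]: where element e was placed in the previous environment.
PREVIOUS = {"recipe": "desk", "email": "desk", "music": "speaker_shelf"}

W_SEMANTIC = 1.0      # weight of the semantic-association term
W_CONSISTENCY = 0.25  # weight of the consistency term


def score(element, container):
    """Utility-weighted benefit of placing one element at one location."""
    consistency = 1.0 if PREVIOUS.get(element) == container else 0.0
    benefit = W_SEMANTIC * SEMANTIC[element][container] + W_CONSISTENCY * consistency
    return UTILITY[element] * benefit


def best_layout():
    """Try every one-to-one assignment and keep the highest-scoring one."""
    best, best_total = None, float("-inf")
    for perm in permutations(CONTAINERS, len(ELEMENTS)):
        total = sum(score(e, c) for e, c in zip(ELEMENTS, perm))
        if total > best_total:
            best, best_total = dict(zip(ELEMENTS, perm)), total
    return best, best_total
```

With these made-up numbers, `best_layout()` places each element at the location it is most strongly associated with, even though the recipe was previously at the desk. Exhaustive search is only practical at this toy scale; a real-time system would need a dedicated solver.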

Project Website

https://augmented-perception.org/publications/2021-semanticadapt.html

Researchers

David Lindlbauer

Research Areas