CMU Researchers Release Zeno for Machine Learning Model Evaluation

Auditing Tool for AI Systems Receives Mozilla Technology Fund Support

A team of Carnegie Mellon University researchers has released a new interactive platform for data management and machine learning (ML) evaluation called Zeno.

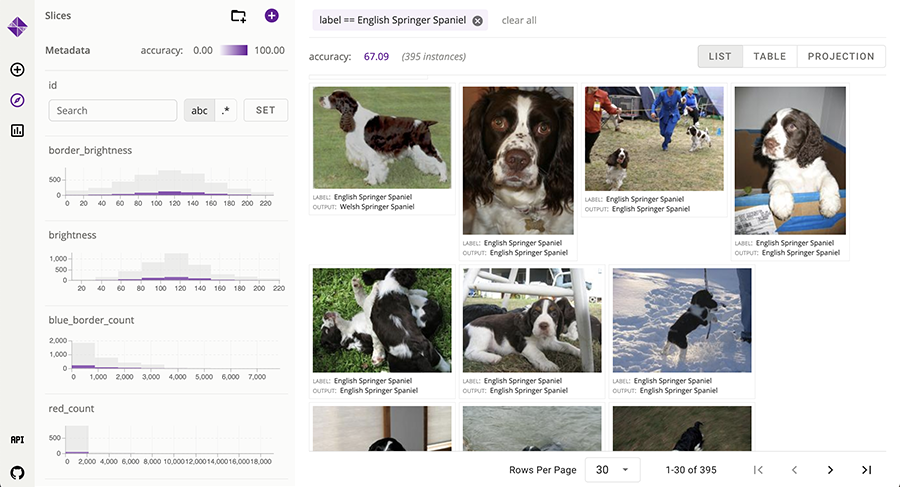

The Zeno framework combines a Python API with an interactive user interface (UI), which empowers its users to explore, visualize and analyze data and ML model performance across custom use cases. The project, led by Alex Cabrera, a Ph.D. student in the Human-Computer Interaction Institute at CMU's School of Computer Science, addresses a timely need among data science and ML practitioners for a tool to assist with the human-centered evaluation of their artificial intelligence (AI) systems.

Machine learning models are often evaluated with only a single overall metric like accuracy, which may not capture other important model behaviors, such as biases or safety issues. Zeno's interactive evaluation is designed to surface these problems. For example, it has helped users discover biases in a text-to-image generation model and systematic errors in a breast cancer classification model.

The Zeno tool recently received $50,000 from the Mozilla Technology Fund (MTF) as part of the fund's 2023 cohort, which aims to add accountability to an emerging and impactful area of tech: auditing tools for AI systems. By improving AI auditing tools and processes, Mozilla is supporting the expanding AI transparency space and investing in the tools needed to hold algorithms accountable.

As the Zeno team continues its work, it will use the MTF funds to help make Zeno the go-to framework for model evaluation, filling the gap left by existing tools that do not address all the real-world problems faced by today's ML practitioners.

"In the past, people have mostly tried to approach evaluation in a piecemeal way — one-off tools for specific issues like robustness of image classifiers or fairness for tabular classification," Cabrera said. "In Zeno, we took a look at those tools and came up with a general design pattern and system that we believe encompasses them all."

As a result, Zeno enables users to explore their data and model outputs with customizable views, evaluate the ML models, and create visualizations and reports to share outside of the system.

"New generative AI technology, as popularized by ChatGPT and DALL-E 2, has made it fairly easy to develop compelling AI-powered demos," said Ameet Talwalkar, an associate professor in the Machine Learning Department and a collaborator on the project. "However, we also know that this technology has limitations and is not always reliable, thus making evaluation a central problem for anyone wanting to turn a demo into a deployable application. Moreover, evaluation is necessarily a human-centric process, both in terms of defining success criteria and evaluating failure modes. There is a fundamental need for new human-computer interaction and machine learning tools and systems like Zeno to support this so-called behavior-driven AI process."

"One thing we saw with every machine learning development team we talked to is that aggregate metrics, like overall accuracy, just aren't enough," said Jason Hong, an HCII professor and collaborator on the project. "For example, these teams created test sets that should never fail under any circumstances, often things related to race, gender, religion or other sensitive topics. These teams also created special test sets for specific cases, for example 'people with glasses' or 'images with blue backgrounds,' because those slices of data were important but underperforming. Zeno makes it easier for machine learning teams to set up and support this kind of testing and evaluate their models beyond aggregate metrics."
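The slice-based testing Hong describes can be illustrated with a minimal sketch in plain Python. It does not use Zeno's API; the records, the `glasses` metadata field and the helper names are all hypothetical, chosen only to show how an aggregate metric can hide an underperforming slice.

```python
from collections import defaultdict

# Hypothetical evaluation records: true label, model prediction, and one
# metadata field ("glasses") used to define slices. Not real data.
records = (
    [{"label": 1, "pred": 1, "glasses": False} for _ in range(8)]
    + [
        {"label": 1, "pred": 1, "glasses": True},
        {"label": 1, "pred": 0, "glasses": True},
    ]
)


def accuracy(rows):
    """Fraction of rows where the prediction matches the label."""
    rows = list(rows)
    return sum(r["label"] == r["pred"] for r in rows) / len(rows)


def slice_accuracy(rows, key):
    """Group rows by the value of a metadata field and score each group."""
    groups = defaultdict(list)
    for r in rows:
        groups[r[key]].append(r)
    return {value: accuracy(group) for value, group in groups.items()}


overall = accuracy(records)
by_glasses = slice_accuracy(records, "glasses")

print(f"overall accuracy: {overall:.2f}")
for value, score in sorted(by_glasses.items()):
    print(f"glasses={value}: {score:.2f}")
```

In this toy data the overall accuracy looks healthy, while the "people with glasses" slice performs far worse, which is the kind of gap a single aggregate metric does not reveal.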

The Zeno team will present their paper, "Zeno: An Interactive Framework for Behavioral Evaluation of Machine Learning," during this month's ACM Conference on Human Factors in Computing Systems (CHI 2023) in Hamburg, Germany. While this paper is the first public deliverable for the project, the team plans to publish follow-up work in the coming year.

In addition to Cabrera, Hong and Talwalkar, the paper's other authors include HCII faculty members Kenneth Holstein and Adam Perer; Erica Fu, a junior studying information systems at CMU; and Donald Bertucci, a junior studying computer science at Oregon State University who was a visiting HCII Research Experience for Undergraduate Program (REU) student last summer.

To learn more about Zeno, interact with a demo or reach out to Cabrera and the team.
