HCII Researchers Receive $3M NSF Grant to Expand AI-Powered Intelligent Science Stations in Schools

A team of researchers from the Human-Computer Interaction Institute (HCII) in Carnegie Mellon University's School of Computer Science has received a $3 million grant from the National Science Foundation (NSF) to design interactive science experiences for students. The goal is to bring engaging, inquiry-based science learning into the classroom and sustain young children's early interest in science.

The work will be led by principal investigator Nesra Yannier, a senior systems scientist in the HCII, with HCII professors Scott Hudson and Ken Koedinger as co-principal investigators. Over four years, the NSF funding will support the development of four Intelligent Science Stations, along with accompanying lesson plans, professional development for teachers, and online resources such as video demonstrations and learning materials. Under this grant, the team will work with four school districts that serve high percentages of underserved and diverse students, reaching thousands of children each year.

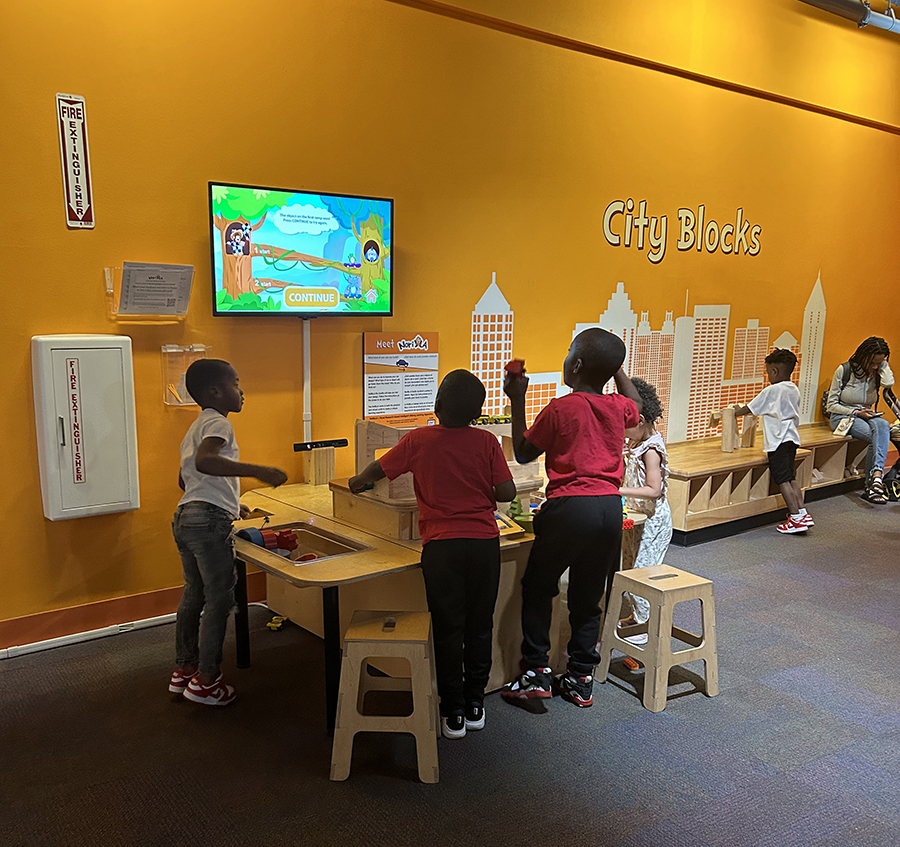

Intelligent Science Stations are interactive activities that give students in kindergarten through fourth grade hands-on science experiences. Each station uses an intelligent agent, artificial intelligence and computer vision to provide feedback and guide students through the lessons. The agent appears on screen as a fun, approachable animated character that works alongside the students. The systems offer support and feedback to students while helping teachers enhance their lessons.

The team will design Intelligent Science Stations and accompanying curriculum spanning a wide variety of STEAM concepts, including balance and stability, forces and motion, levers and proportional reasoning, projectiles, and simple machines, all aligned with national standards. The stations will also emphasize inquiry and engineering design.

The project builds on NoRILLA, a mixed-reality platform that Yannier and colleagues created. This AI-powered platform combines physical and virtual worlds to improve children's inquiry-based learning in science, technology, engineering and math. An AI gorilla leads users through experiments with items such as an earthquake table and blocks, or ramps. The gorilla prompts users to make predictions, explains the results, and guides them through different challenges with interactive feedback and AI guidance. The technology uses computer vision to track what users are doing in the physical world and explains concepts to help inform their decisions throughout the experiment.

In a study published in Science, the NoRILLA team found that U.S. children who encountered hands-on exhibits with this added AI interaction were four times more engaged than those who used the exhibits without the AI. The team also found that the AI-based mixed-reality system, which bridges physical and virtual worlds, improved children's learning fivefold compared with equivalent screen-based tablet or computer games.

The team received $2.3 million from the NSF in 2020 to work on NoRILLA intelligent science exhibits in collaboration with museums and science centers across the country.

For More Information

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu
