Related People

Sherry Tongshuang Wu, Jeffrey Bigham, Sara Kingsley, Wesley Hanwen Deng, Motahhare Eslami, Ken Holstein, Jason Hong, Hong Shen, Steven Moore, John Stamper

HCII at HCOMP 2024

“Responsible Crowd Work for Better Artificial Intelligence (AI)” was the theme of the 12th annual AAAI Conference on Human Computation and Crowdsourcing (HCOMP), hosted here in Pittsburgh from October 16-19, 2024.

“I’m thrilled that HCOMP was held in Pittsburgh this year,” said Sherry Tongshuang Wu, HCII assistant professor. “Carnegie Mellon University has a strong history in human computation and crowdsourcing research, with many HCII researchers deeply engaged in exploring the evolving relationship between humans and AI in these fields.”

“In fact, Pittsburgh last hosted HCOMP in 2014, with Jeff Bigham serving as conference chair. Now, as AI systems increasingly replicate crowd work, collaborate in complex workflows, and tackle tasks resembling human labor, it feels especially meaningful to bring the conference and its community back to Pittsburgh a decade later for another round of in-depth discussions,” said Wu.

HCII faculty members Wu and Bigham served as local co-organizers of HCOMP 2024, and the larger HCII community also contributed to the event as researchers, organizers, and volunteers. HCII authors contributed to two accepted papers and two works-in-progress and helped organize two workshops, all listed below.

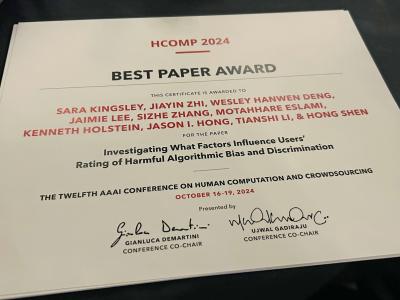

A Carnegie Mellon University team received the only Best Paper Award at HCOMP 2024 for their work on “Investigating What Factors Influence Users' Rating of Harmful Algorithmic Bias and Discrimination.”

The two works-in-progress started as undergraduate projects with the HCII REU program. Wesley Hanwen Deng, HCII PhD student and mentor to the REU students, said, “I had a great time mentoring two REU students, Claire Wang and Matheus Kunzler Maldaner, over the past summer, and I’m very proud of them for attending their first conference and presenting their ongoing research on tools and processes to support users in auditing generative AI systems.”

The downtown Pittsburgh location allowed HCOMP to be co-located with the UIST conference. The overlap with the final day of UIST created opportunities for collaboration across HCI disciplines.

__________

Accepted papers with HCII contributing authors

Best Paper Award: Investigating What Factors Influence Users' Rating of Harmful Algorithmic Bias and Discrimination

Sara Kingsley, Jiayin Zhi, Wesley Hanwen Deng, Jaimie Lee, Sizhe Zhang, Motahhare Eslami, Kenneth Holstein, Jason I. Hong, Tianshi Li, Hong Shen

There has been growing recognition of the crucial role users, especially those from marginalized groups, play in uncovering harmful algorithmic biases. However, it remains unclear how users’ identities and experiences might impact their rating of harmful biases. We present an online experiment (N=2,197) examining these factors: demographics, discrimination experiences, and social and technical knowledge. Participants were shown examples of image search results, including ones that previous literature has identified as biased against marginalized racial, gender, or sexual orientation groups. We found participants from marginalized gender or sexual orientation groups were more likely to rate the examples as more severely harmful. Additional factors affecting users’ ratings included discrimination experiences, and having friends or family belonging to marginalized demographics. These results carry significant implications for the future of AI security and safety. They highlight the importance of cultivating a diverse workforce while simultaneously reducing the burden placed on marginalized workers to identify AI-related harms. This can be achieved by adequately training non-marginalized workers to recognize and address these issues.

Assessing Educational Quality: Comparative Analysis of Crowdsourced, Expert, and AI-Driven Rubric Applications

Steven Moore, Norman Bier, John Stamper

Exposing students to low-quality assessments such as multiple-choice questions (MCQs) and short answer questions (SAQs) is detrimental to their learning, making it essential to accurately evaluate these assessments. Existing evaluation methods are often challenging to scale and fail to consider their pedagogical value within course materials. Online crowds offer a scalable and cost-effective source of intelligence, but often lack necessary domain expertise. Advancements in Large Language Models (LLMs) offer automation and scalability, but may also lack precise domain knowledge. To explore these trade-offs, we compare the effectiveness and reliability of crowdsourced and LLM-based methods for assessing the quality of 30 MCQs and SAQs across six educational domains using two standardized evaluation rubrics. We analyzed the performance of 84 crowdworkers from Amazon's Mechanical Turk and Prolific, comparing their quality evaluations to those made by the three LLMs: GPT-4, Gemini 1.5 Pro, and Claude 3 Opus. We found that crowdworkers on Prolific consistently delivered the highest quality assessments, and GPT-4 emerged as the most effective LLM for this task. Our study reveals that while traditional crowdsourced methods often yield more accurate assessments, LLMs can match this accuracy in specific evaluative criteria. These results provide evidence for a hybrid approach to educational content evaluation, integrating the scalability of AI with the nuanced judgment of humans. We offer feasibility considerations in using AI to supplement human judgment in educational assessment.

__________

HCOMP Workshops

Two of the three workshops offered at HCOMP had HCII faculty and students on the organizing teams.

Workshop: Responsible Crowdsourcing for Responsible Generative AI: Engaging Crowds in AI Auditing and Evaluation

Wesley Hanwen Deng, Hong Shen, Motahhare Eslami, Ken Holstein

This workshop brought together leading experts from industry, civil society, and academia to discuss a future research agenda for designing and deploying responsible and effective crowdsourcing pipelines for AI auditing and evaluation. The CMU team led discussions to explore the challenges and opportunities of engaging diverse stakeholders in auditing and red-teaming generative AI systems.

Workshop: Participatory AI for Public Sector

Motahhare Eslami, John Zimmerman

__________

Works-In-Progress with HCII contributing authors

Designing a Crowdsourcing Pipeline to Verify Reports from User AI Audits

Claire Wang, Wesley Hanwen Deng, Jason Hong, Ken Holstein, Motahhare Eslami

MIRAGE: Multi-model Interface for Reviewing and Auditing Generative Text-to-Image AI

Matheus Kunzler Maldaner, Wesley Hanwen Deng, Jason Hong, Ken Holstein, Motahhare Eslami
